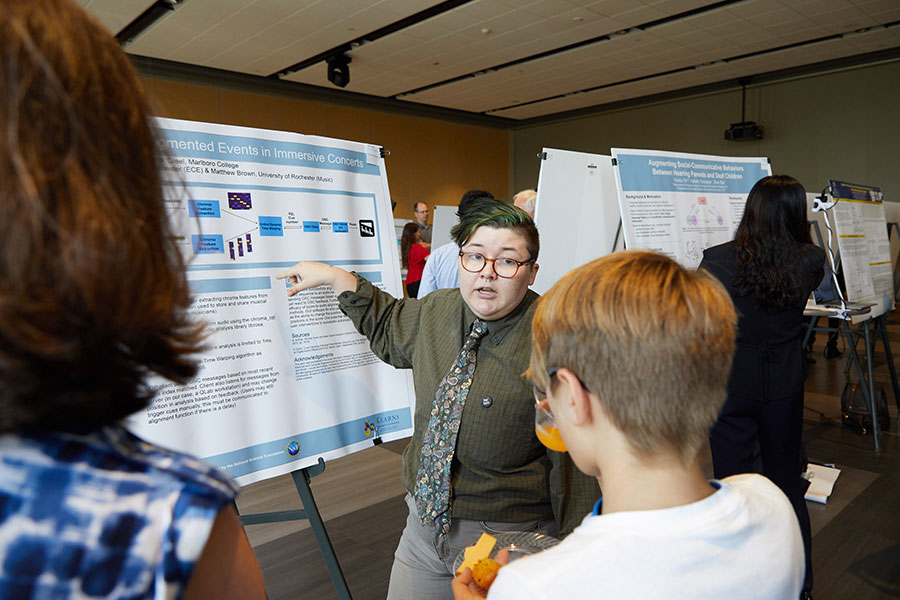

Computational Methods for Music, Media, and Minds REU

National Science Foundation Research Experience for Undergraduates (NSF REU)

Computational Methods for Understanding Music, Media, and Minds

How can AI facilitate inclusive and accessible communication for deaf and hard of hearing children? What can Twitter tell us about public perception of tobacco products? How does the brain respond to naturalistic musical performances?

These are some of the questions that students will investigate in our summer REU: Computational Methods for Understanding Music, Media, and Minds. Over ten weeks, students will explore an exciting, interdisciplinary research area that combines artificial intelligence, music, statistics, and cognitive science. Students will be supported with funding from the National Science Foundation and mentored by faculty members drawn from Computer Science, Brain and Cognitive Sciences, Music Theory, and Public Health.

PI

Ajay Anand, PhD

Deputy Director

Associate Professor

Goergen Institute of Data Science

Co-PI

Zhiyao Duan

Associate Professor

Department of Electrical and Computer Engineering, Department of Computer Science

We are awaiting a decision from NSF regarding funding for the Summer 2024 session and beyond. We will post updates as soon as possible.

You are eligible to apply if:

- You are a first-, second-, or third-year full-time undergraduate student at a college or university.

- You are a U.S. citizen or hold a green card as a permanent resident.

- You will have completed two computer science courses or have equivalent programming experience by the start of the summer program.

We are unable to support international students through this federally funded NSF REU program. If you are looking for self-funded research opportunities, you can contact one of our affiliated faculty members directly to discuss your research interests.

Neither a computer science major nor prior research experience is required. We seek to recruit a diverse group of students with varying backgrounds and levels of experience. We encourage applications from students attending non-research institutions and from students from communities underrepresented in computer science.

Before starting the application, you should prepare:

- An unofficial college transcript (a list of your college courses and grades), as a PDF file. Please include the courses you are currently taking.

- Your CV or resume, as a PDF file.

- A personal statement (up to 5,000 characters) explaining why you wish to participate in this REU, including how it engages your interests, how the experience would support your career goals or help you define them, and the special skills and interests you would bring to the program.

- The name and email address of a teacher or supervisor who can recommend you for the REU.

How to Apply:

- Create an NSF ETAP account and fill out all portions of the registration.

- Select our REU program and follow the prompts to apply.

- Apply online no later than January 29, 2023.

- Notification of acceptance will be communicated between March 5 and April 5, 2023.

The 2023 REU dates are Tuesday, May 23, 2023 to Friday, July 28, 2023.

Students accepted into the REU will receive:

- On-campus housing

- Meal stipend

- A stipend of $6,000

- Up to $600 to help pay for travel to and from Rochester

Your experience will include:

- A Python programming bootcamp to help you learn or strengthen your programming skills.

- Performing research with a team of students and faculty on one of the REU projects.

- Professional development activities, including graduate school preparation and career planning.

- Social events and experiences, including opportunities to meet and network with students from other REU programs and members of the University community.

The David T. Kearns Center coordinates summer logistics and activities for all REU programs across the University of Rochester campus, including travel, housing, the REU orientation, and the undergraduate research symposium.

Visit our REU summer activities page for more detailed information on programs and events.

Visit our past REU sessions page for more information on previous projects, participants, and presentations.

2023 Projects

Project #1

Title: AI-Mediated Face-to-Face Communication

Mentor: Zhen Bai (Computer Science)

Face-to-face communication is central to human life. Language barriers and social difficulties, however, often make communication inaccessible or unequal. Over 90% of Deaf and Hard of Hearing (DHH) children in the US are born to hearing parents who don’t know sign language, the natural language for DHH infants at birth. People with autism and people from marginalized gender, racial, and ethnic groups often have difficulty engaging in group conversation. In this project, we seek to create AI-Mediated Communication Technologies that make one-on-one and small-group interaction more accessible and inclusive. We are looking for students with interest or experience in one or more of the following areas: Augmented and Virtual Reality, Human-Computer Interaction, Natural Language Processing, and Machine Vision. Students will take part in the design, prototyping, and evaluation of next-generation AI-Mediated Communication Technologies. Example research papers include “Signing-on-the-Fly: Technology Preferences to Reduce Communication Gap between Hearing Parents and Deaf Children” and “Context-responsive ASL Recommendation for Parent-Child Interaction.”

Project #2

Title: Continual Learning in Deep Neural Networks of Audio-Visual Data

Mentor: Christopher Kanan (Computer Science)

Conventional deep learning systems are trained on static datasets and then kept fixed after learning. In contrast, humans and animals learn continuously over their lifetimes. Continual learning aims to develop systems that mimic this ability. While enormous progress has been made in continual learning for uni-modal domains (e.g., static image datasets), little work has been done on continual learning from video or on learning visual and auditory information simultaneously. Our lab has created multiple state-of-the-art, brain-inspired systems for continual learning in deep neural networks on vision datasets, and this project aims to extend this work to create systems capable of audio-visual continual learning. We will first study continual learning of audio data, which is relatively unexplored, before moving on to audio-visual continual learning. The REU student will be trained in machine learning, continual learning, and conducting research in artificial intelligence. Two REU students have previously worked in the lab; both published peer-reviewed papers and were later accepted into PhD programs.

Project #3

Title: Analyzing Time-lapse Videos of 3D Printing

Mentors: Sreepathi Pai, Chenliang Xu, and Yuhao Zhu (Computer Science)

Time-lapse videos of 3D printing are a popular, mesmerizing record of the layer-by-layer printing process used by most consumer 3D printers. Although these videos are currently used primarily for entertainment, we wish to explore using similar videos for quality control of 3D prints. We propose to overlay information on these videos that establishes traceability over the entire 3D printing process. We want to trace every piece of extruded plastic back to the STL file that describes the 3D model, the GCODE program that describes the print head's path, the generated kinematics for electro-mechanical components such as motors, and the environmental and filament characteristics that affect the print. Such an end-to-end view will enable novel debugging and metrology procedures, improve the rigor of experimental methods, and allow 3D printers to produce certificates of quality as soon as a part is printed, reducing costs and broadening the range of applications. Our methods will draw on compiler and programming language techniques, computer vision, and processing of 3D point cloud data.

Project #4

Title: Public Perceptions of Synthetic Cooling Agents in Electronic Cigarettes on Twitter

Mentor: Dongmei Li (Public Health Sciences, UR Medical Center)

According to the 2022 National Youth Tobacco Survey (NYTS), about 2.55 million U.S. youth, including 14.1% of high school students and 3.3% of middle school students, currently use electronic cigarettes (e-cigarettes). Recently, synthetic cooling agents such as WS-3 and WS-23 have been added to e-cigarettes to create a cooling sensation that reduces airway irritation and nicotine harshness for users, without a minty odor. Many e-cigarettes on the market, especially disposable e-cigarettes, contain synthetic cooling agents in their “ice”-flavored products at levels exceeding safety thresholds. Twitter is a popular social media platform that researchers use to examine public perceptions and discussions of tobacco products. We aim to investigate public perceptions and discussion of synthetic cooling agents in e-cigarettes on Twitter using natural language processing and advanced artificial intelligence techniques. We will identify tweets related to synthetic cooling agents using keywords such as “ice”, “WS-3/WS-23”, and “Kool/Cool”. We will use the Valence Aware Dictionary and sEntiment Reasoner (VADER), a commonly used sentiment analyzer, to examine public perceptions of synthetic cooling agents in e-cigarettes. We will identify the topics discussed using latent Dirichlet allocation (LDA) and BERT-based topic models. To examine potential differences in perceptions across demographic groups, we will estimate the age and gender of Twitter users from their profile pictures using computer vision and deep learning tools such as the Face++ API, and estimate users' race/ethnicity with tools such as Ethnicolr. Results from our study will provide important information to the FDA for future regulatory action on e-cigarettes.

Project #5

Title: The Impact of Musical Features and Expertise on Summary Statistical “Gist” Processing of Music

Mentors: Elise Piazza (Brain and Cognitive Sciences), David Temperley (Eastman School of Music)

We are constantly bombarded with sensory information in our everyday lives. To process large groups of stimuli more efficiently, the brain extracts their “gist”, a summary of the group such as its average. In vision, gist can be processed quickly and accurately for a number of features, such as size, color, motion direction, and facial emotion. In audition, listeners can estimate the mean pitch across a sequence of tones. It is crucial, however, to understand how listeners compute summary statistics from more ecologically valid stimuli, such as music. Our lab has begun this work using short, computer-generated melodic sequences in which tonality and metrical distribution are manipulated to create clips that are more or less melodic. Participants in an online study listened to each melody and judged whether a probe tone was higher or lower than the mean pitch. REU students will have opportunities to apply computational analyses to better understand the data, including building models that predict summary statistical estimates from different musical features of the stimuli and that distinguish musicians’ response patterns from non-musicians’. Students will also have the chance to implement follow-up studies that vary additional musical features, use multimodal (audio-visual) stimuli, and test gist processing in the context of speech.

Project #6

Title: Modeling Brain Responses During Naturalistic Music Performance

Mentors: Elise Piazza (Brain and Cognitive Sciences), Samuel Norman-Haignere (Neuroscience)

Music performance is a complex behavior requiring coordination of the visual, auditory, and motor systems to read music from a page, incorporate auditory feedback from notes already played, and produce the correct motor output. The proposed project will focus on analyzing a new fMRI dataset of expert pianists playing naturalistic music on a three-octave keyboard in the scanner. Because naturalistic music is such a complex stimulus, we must use non-traditional analysis approaches to understand how the brain processes it and produces motor output. Students working on this project will have the opportunity to implement one or more analysis methods inspired by previous studies of naturalistic music perception. Potential analyses include: (1) intersubject correlation using whole-brain searchlight and region-of-interest approaches, (2) functional connectivity between auditory and motor areas, (3) an encoding model approach, potentially combined with component methods such as independent component analysis (ICA) or shared response modeling (SRM), and (4) segmentation of neural data into events using hidden Markov models. These analyses address different questions: how stimulus-driven neural responses are shared across subjects (1), how auditory and motor areas work together to support music performance (2), and how different brain areas respond to different features of the music (3) or to hierarchical structure in the stimulus (4). Students may also develop and implement analyses that focus more on the motor production aspect of this dataset.

2023 Participants

| Name | Institution |
|---|---|
| Ashley Bao | Amherst College |
| Sophia Cao | University of Michigan |
| Sophia Caruana | Nazareth College |
| Jesus Diaz | New Mexico State University |
| Julia Hootman | Xavier University |
| Andrew Liu | University of North Carolina at Chapel Hill |
| Shaojia (Emily) Lu | Wellesley College |
| Kaleb Newman | Brown University |
| Sangeetha Ramanuj | Oberlin College |
| Liliana (Lili) Seoror | Barnard College |